In a lengthy comment on my pragmatic speculative realism post, philosopher Levi Bryant asks what issues in technology and media studies prompted my interest in object-oriented ontology. I’d like to try to answer the question for the benefit of readers finding their way here from sources in philosophy rather than game studies.

In some ways, I think I was doing object-oriented ontology before I even knew it. The single observation I tried to make in my first book, Unit Operations, is that formal discreteness ought to be understood as the primary mode of production, both across and within media (this idea owes much to systems theory and complexity theory, but does not limit itself to living systems like social systems or ecosystems). I used the word “unit” (“a unit is a material element, a thing”) to avoid the term collision with computing I discussed recently, but the spirit of what I had in mind is much the same as what Graham Harman means when he talks about objects, and I made that connection explicitly on page 5 of the book. (In fact, Graham and I have been corresponding on and off since soon after its publication in 2006.)

(Sidenote: even though Graham has taken me to task for using Badiou’s set theory ontology as a tool, I think my application of Badiou in Unit Operations takes a much more object-oriented tack than would the simple invocation of the event and the count-as-one. But we’ll save that debate for another time.)

As a former Lacanian psychoanalyst familiar with cultural studies, Levi makes the following primary observation in his comment:

My sense is that “cultural studies” has been far too dominated by what I generically refer to as “semiotic” approaches. That is, we get a lot of emphasis on interpretation and analysis of signs and cultural phenomena, and the rest falls by the wayside.

This seems generally right to me, given an important provision. There are humanists who consider the materiality of creativity, but when they do so they usually do it from an historical perspective (e.g., the history of the book, itself a rare and possibly ostracised practice in literary scholarship), or from a Marxist perspective (i.e., with respect to the human experience of the material production of artifacts and systems). In both of these cases, the primary—perhaps the only—reason to consider the material underpinnings of human creativity is to understand or explain the human phenomena of creation and interpretation. Clearly there has been a conflation of realism and materialism in cultural studies for decades now, such that it’s very easy to do the latter without the former.

In Unit Operations, I was focused on comparative criticism, particularly criticism that would be able to treat very different sorts of media, like literature and videogames, in tandem. As someone who has both used and created computational work, it was always clear to me that the material underpinnings of such systems were important for anyone who wants to understand them in any depth, and yet were frequently ignored. For example, one topic I discuss in Unit Operations is the relationship between the shared hardware of different videogames, and how such relations cannot be sufficiently explained through cultural theoretical notions like intertextuality or the anxiety of influence. This is an approach that would come back as a primary method in my work with Nick Montfort on platform studies, discussed below.

It is also a sentiment that agrees strongly with another of Levi’s observations:

The idea seems to be that the medium is irrelevant—functioning only as a carrier (vehicle) of signs—and contributes nothing of its own.

One of the clearest disagreements I remember having along these lines was with well-known media scholar (and friend) Henry Jenkins. In his very successful book Convergence Culture, Jenkins is so determined to correct the misperception that convergence is a technological phenomenon (the single media device, or what he calls the black box fallacy), that he throws the baby out with the bathwater, treating all media as mere delivery systems. In my review of the book, I noted that something is washed away prematurely in this rush to open the cultural floodgates:

Technologies—particular ones, like computer microprocessors, mobile devices, telegraphs, books, and smoke signals—have properties. They have affordances and constraints. Different technologies may expose or close down particular modes of expression.

As I have thought about these topics more, I am coming to realize that the issues I was facing in game studies were really just extremely amplified versions of a sickness that faces cultural studies more broadly: a disinterest in and a distrust of explanations of the real. Chalk it up to C.P. Snow’s two cultures problem if you’d like, but it’s undeniable that few successful humanities scholars possess both experience with and interest in matters of science and technology, practical matters that would allow them to theorize smartly about such topics. Even in Science and Technology Studies (STS), it is common to hold the actual science and technology somewhat at arm’s length in order to focus on the human aspects of its use, and the “policing” of that practice.

Here again I find myself agreeing with Levi:

Here, I think, the charge of “technological determinism” is rather stupid and reactionary. The point isn’t that technology determines particular social phenomena, but that it plays an organizing role.

In an interview with Paul Ennis that appeared the day following Levi’s comments, Graham Harman notes that Marshall McLuhan is not taken as seriously as he should be, as a philosopher. Perhaps part of my dissatisfaction with cultural studies in general and game studies in particular can be summarized by a simple pointer to McLuhan: the properties of a medium really do matter, and thinking that attending to them amounts to technological determinism is a perverted and backward mistruth.

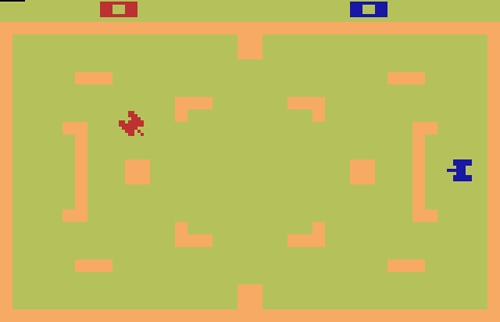

My colleague Nick Montfort and I tried to tackle this problem head-on, by example rather than rhetoric. We wrote a book, Racing the Beam, about the 1977 Atari Video Computer System, the first popular interchangeable cartridge videogame system, which inaugurates an approach we call Platform Studies. The book is also the first in a book series by that name that Nick and I are co-editing at the MIT Press. As we explain on the series website, platform studies invites authors to investigate the relationships between the hardware and software design of computing systems and the creative works produced on those systems. It’s a book about human creators and players and culture to be sure, but it’s also a book about objects—microprocessors, faux-wood paneling, CRT displays, RF adapters, and so forth.

An example: the horizontal symmetry apparent in many Atari VCS games could be attributed to trends in the history of art or as a reference to the bilateral symmetry native to earthly animals. But such a response would fail to take into account the fact that the production of symmetry on the device is a convenience afforded by its hardware design, which provides memory-mapped registers capable of storing 20 bits of data for a screen-worth of low-res background graphics 40 bits wide. Doubling or mirroring the left half of the screen involves a single assembly instruction that flips a bit on another register. That convenience was further inspired by the way people conceived of videogames at the time. In this case, the relationship between design, material constraint, and individual expression is complex, irreducible to appeals to any one factor alone. The 20 bits of storage in registers PF0, PF1, and PF2 do not determine the aesthetics of a game like Combat, nor are they simple constructions of social practices.
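To make the hardware convenience concrete, here is a minimal sketch in Python (not Atari assembly) of how 20 stored bits become a 40-bit-wide playfield. It is an illustration under stated assumptions, not a model of the actual chip: the function names are hypothetical, the `reflect` flag stands in for the single bit on another register mentioned above, and the bit ordering within PF0, PF1, and PF2 follows the commonly documented layout, which should be treated as an assumption here.

```python
def playfield_half(pf0: int, pf1: int, pf2: int) -> list[int]:
    """Expand three register values into the 20 bits of the left half.

    Assumed ordering (hypothetical helper, simplified from documentation):
    PF0 contributes its upper four bits, low to high;
    PF1 contributes eight bits, high to low;
    PF2 contributes eight bits, low to high.
    """
    bits = [(pf0 >> i) & 1 for i in range(4, 8)]
    bits += [(pf1 >> i) & 1 for i in range(7, -1, -1)]
    bits += [(pf2 >> i) & 1 for i in range(8)]
    return bits


def playfield_line(pf0: int, pf1: int, pf2: int, reflect: bool) -> list[int]:
    """Build the full 40-bit line from the 20 stored bits.

    With reflect off, the right half repeats the left half.
    With reflect on, the right half is the left half mirrored.
    """
    left = playfield_half(pf0, pf1, pf2)
    right = left[::-1] if reflect else left
    return left + right


def render(line: list[int]) -> str:
    """Draw one line as text: '#' for a set bit, '.' for a clear one."""
    return "".join("#" if bit else "." for bit in line)


if __name__ == "__main__":
    # Same 20 bits, two different screens: only the flag changes.
    print(render(playfield_line(0xF0, 0xFF, 0x00, reflect=False)))
    print(render(playfield_line(0xF0, 0xFF, 0x00, reflect=True)))
```

The point of the sketch is that symmetry costs nothing extra in storage: the same three values yield either a repeated or a mirrored line depending on one flag, which is why a symmetrical playfield was the path of least resistance for a programmer on this machine.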

I think these problems of realism are much easier to see (and possibly to correct) in digital media and game studies than in other forms of media studies, because we deal with complex machines that have changed in massive and rapid ways over the past four decades. As such, it’s become harder and harder to remain credible while only looking at the surface effects of artifacts like videogames, the semiotic layer. Indeed, the very semiotic possibilities in complex computer software like videogames are partially—sometimes wholly—unknowable without a fundamental knowledge of the material reality of those artifacts and the machines on which they are used.

That said, Nick and I still encounter a lot of resistance, so the task is hardly done. I suspect that some of this resistance comes from the frustration of ignorance, as humanists have been trained for so long to distrust the hard sciences and engineering (for a variety of reasons), and so they have not pursued interests in either. There are exceptions of course (Kate Hayles, who was my dissertation adviser at UCLA, Alex Galloway, who is an adept theorist and programmer, etc.). But generally, “interdisciplinarity” in the humanities has meant “French and German.”

At the end of his comment, Levi suggests a conference or journal or collection on these issues, a project crossing disciplines. I’m more than game, as it were, and I’d add that I find it liberating and surprising that the easiest support I’ve found for my interests in technology as objects has come from two places: first, electrical engineering, and second, philosophy. Rudimentary common interests in formal logic aside, that’s clearly evidence that satisfying approaches will come from unusual perspectives, with mutual respect.